Deep Fake Challenge: an Overview

Published:

Here it follows a brief introduction to the Deep Fake Challenge from Kaggle.

Contents

- DeepFake: a threat to democracy or just a little bit of fun?

- What is Kaggle?

- Somebody said GAN? A dive into DeepFakes creation

- DeepFake Detection Challenge

- Preliminary data exploration

- Detection starter kit

- Metadata exploration

- FFMPEG and FFPROBE

- Videos exploration

- Frames Extraction

- Face detection

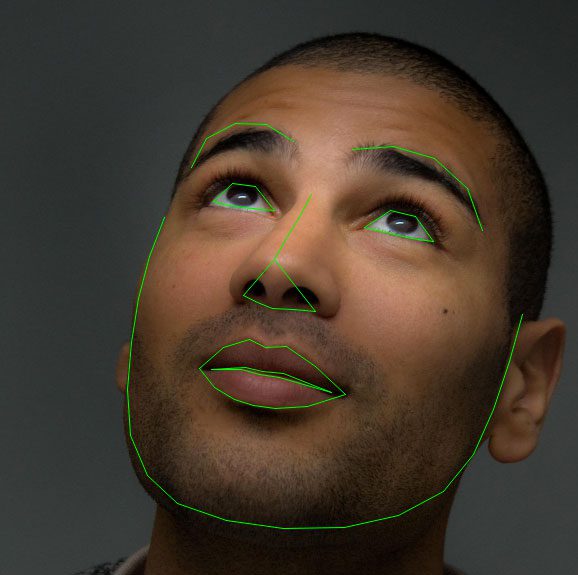

- Facial landmarks

- References

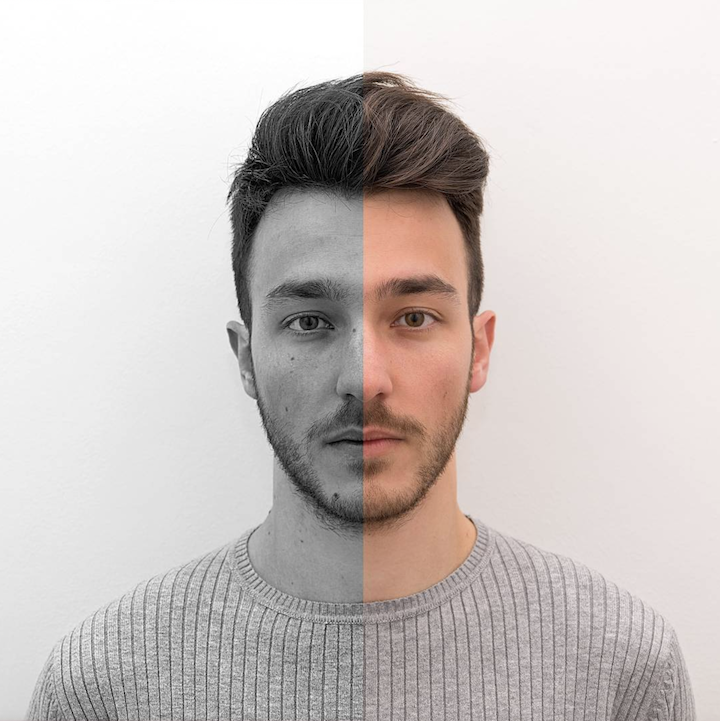

DeepFake: a threat to democracy or just a bit of fun?

“We are already at the point where you can’t tell the difference between deepfakes and the real thing,” Professor Hao Li, University of Southern California

Facebook has announced it will remove videos modified by artificial intelligence, known as deepfakes, from its platform.

from IPython.lib.display import YouTubeVideo

# Youtube https://www.youtube.com/watch?v=cQ54GDm1eL0

YouTubeVideo('cQ54GDm1eL0', width= 600, height= 400)

What is Kaggle?

Kaggle is an AirBnB for Data Scientists – this is where they spend their nights and weekends. It’s a crowd-sourced platform to attract, nurture, train and challenge data scientists from all around the world to solve data science, machine learning and predictive analytics problems. It has over 536,000 active members from 194 countries and it receives close to 150,000 submissions per month. Started from Melbourne, Australia Kaggle moved to Silicon Valley in 2011, raised some 11 million dollars from the likes of Hal Varian (Chief Economist at Google), Max Levchin (Paypal), Index and Khosla Ventures and then ultimately been acquired by the Google in March of 2017. Kaggle is the number one stop for data science enthusiasts all around the world who compete for prizes and boost their Kaggle rankings. There are only 94 Kaggle Grandmasters in the world to this date.

Do you know that most data scientists are only theorists and rarely get a chance to practice before being employed in the real-world? Kaggle solves this problem by giving data science enthusiasts a platform to interact and compete in solving real-life problems. The experience you get on Kaggle is invaluable in preparing you to understand what goes into finding feasible solutions for big data.

Fine, fine, fine but what do we do on Kaggle? We Learn

A dive into DeepFakes creation: somebody said GAN?

Deepfakes are fakes generated by deep learning. So far so easy.

This usually means someone used a generative model like an AutoEncoder or most likely a Generative Adversarial Network, short GAN. GANs are technically two networks that work against each other, illustrated below. The artist (generator) draws its inspiration from a noise sample and creates a rendering of the data you are trying to generate with said GAN. The private investigator (discriminator) randomly gets assigned real and fake data to investigate.

The learning process is collaborative. The generator gets better at fooling the discriminator and the discriminator gets better at figuring out which data is real and which isn’t. In mathematical terms they are learning until a Nash equilibrium is reached, which means neither can learn new tricks and get better. They’re a really cool concept and even used in scientific simulation at CERN.

You can probably guess that they can be tricky to train, due to so many moving parts. This has become a very popular area of research, warranting a GAN Zoo of all named GANs. Some important stuff you may want to check out if your interested are keywords like Wasserstein GANs, Gradient Penalization, Attention, and in this context Style Transfer (namely face2face).

It sounds absurd, I know. Here you can find some more practical examples, why don’t you play with them for a while?

DeepFake Detection Challenge

I strongly encourage you to start first with the official Getting Started guide here.

What is the goal of the Deepfake Detection Challenge? According to the FAQ “The AI technologies that power deepfakes and other tampered media are rapidly evolving, making deepfakes so hard to detect that, at times, even human evaluators can’t reliably tell the difference. The Deepfake Detection Challenge is designed to incentivize rapid progress in this area by inviting participants to compete to create new ways of detecting and preventing manipulated media.”

- In this Code Competition:

- CPU Notebook <= 9 hours run-time, GPU Notebook <= 9 hours run-time on Kaggle’s P100 GPUs, No internet access enabled

- External data is allowed up to 1 GB in size. External data must be freely & publicly available, including pre-trained models

- This code competition’s training set is not available directly on Kaggle, as its size is prohibitively large to train in Kaggle. Instead, it’s strongly recommended that you train offline and load the externally trained model as an external dataset into Kaggle Notebooks to perform inference on the Test Set. Review Getting Started for more detailed information.

Scoring

Submissions are scored on log loss:

where:

- n is the number of videos being predicted

- y^i is the predicted probability of the video being FAKE

- yi is 1 if the video is FAKE, 0 if REAL

- log() is the natural (base e) logarithm

# Sklearn Implementation

from sklearn.metrics import log_loss

log_loss(["REAL", "FAKE", "FAKE", "REAL"],

[[.1, .9], [.9, .1], [.8, .2], [.35, .65]])

Data

- We have a bunch of .mp4 files, split into compressed sets of ~10GB a piece. A metadata.json accompanies each set of .mp4 files, and contains filename, label (REAL/FAKE), original and split columns, listed below under Columns.

- The full training set is just over 470 GB (Yeah it’s huge !).

References: https://deepfakedetectionchallenge.ai/faqs

Dataset Description

There are 4 groups of datasets associated with this competition.

Training Set: This dataset, containing labels for the target, is available for download for competitors to build their models. It is broken up into 50 files, for ease of access and download. Due to its large size, it must be accessed through a GCS bucket which is only made available to participants after accepting the competition’s rules. Please read the rules fully before accessing the dataset, as they contain important details about the dataset’s permitted use. It is expected and encouraged that you train your models outside of Kaggle’s notebooks environment and submit to Kaggle by uploading the trained model as an external data source.

Public Validation Set: When you commit your Kaggle notebook, the submission file output that is generated will be based on the small set of 400 videos/ids contained within this Public Validation Set. This is available on the Kaggle Data page as test_videos.zip

Public Test Set: This dataset is completely withheld and is what Kaggle’s platform computes the public leaderboard against. When you “Submit to Competition” from the “Output” file of a committed notebook that contains the competition’s dataset, your code will be re-run in the background against this Public Test Set. When the re-run is complete, the score will be posted to the public leaderboard. If the re-run fails, you will see an error reflected in your “My Submissions” page. Unfortunately, we are unable to surface any details about your error, so as to prevent error-probing. You are limited to 2 submissions per day, including submissions with errors.

Private Test Set: This dataset is privately held outside of Kaggle’s platform, and is used to compute the private leaderboard. It contains videos with a similar format and nature as the Training and Public Validation/Test Sets, but are real, organic videos with and without deepfakes. After the competition deadline, Kaggle transfers your 2 final selected submissions’ code to the host. They will re-run your code against this private dataset and return prediction submissions back to Kaggle for computing your final private leaderboard scores.

import pandas as pd

import numpy as np

import glob, shutil

import timeit, os, gc

import subprocess as sp

from tqdm import tqdm

from collections import defaultdict

import json

from IPython.display import HTML

from base64 import b64encode

import cv2

pd.set_option('display.max_colwidth', -1)

pd.set_option('display.max_columns', 500)

pd.set_option('display.max_rows', 4000)

import matplotlib.pyplot as plt

%matplotlib inline

import seaborn as sns

sns.set(font_scale=1.0)

Review of Data Files Accessible within kernel

Files

- train_sample_videos.zip - a ZIP file containing a sample set of training videos and a metadata.json with labels. the full set of training videos is available through the links provided above.

- sample_submission.csv - a sample submission file in the correct format.

- test_videos.zip - a zip file containing a small set of videos to be used as a public validation set. To understand the datasets available for this competition, review the Getting Started information.

Metadata Columns

- filename - the filename of the video

- label - whether the video is REAL or FAKE

- original - in the case that a train set video is FAKE, the original video is listed here

- split - this is always equal to “train”.

train_sample_metadata = pd.read_json('/Volumes/DFChallenge/deepfake-detection-challenge/train_sample_videos/metadata.json').T

train_sample_metadata.head()

# Get percentage

norm_constant = train_sample_metadata.groupby('label')['label'].count()[0] + train_sample_metadata.groupby('label')['label'].count()[1]

(train_sample_metadata.groupby('label')['label'].count()/norm_constant).plot(figsize=(15, 5), kind='bar', title='Distribution of Labels in the Training Set')

plt.show()

Preliminary data exploration

Detection Starter Kit

A quickstart guide on DeepFakes: “DeepFakes and Beyond: A Survey of Face Manipulation and Fake Detection

This CPU-only kernel is a Deep Fakes video EDA. It relies on static FFMPEG to read/extract data from videos.

- It extracts meta-data. They help us to know frame rate, dimensions and audio format (we can forget leak of “display_ratio” as it will be fixed).

- It extracts frames of videos as PNG.

- It extracts audio track as AAC (disabled).

- It compares a few face detectors (OpenCV HaarCascade, MTCNN). More to come (Yolo, BlazeFace, DLib, Faced, …).

- It provides basic statistics on faces per video, face width/height and face detection confidence. It computes an average face width/height.

We notice that face detection (with OpenCV currently) is far from being perfect. An additional stage to clean-up detected faces is required before training a model! Maybe some kind of votes/ensemble with different detectors would help.

In this kernel you will see also some interesting edge cases of face detection:

- Face detected on a t-shirt.

- Face detected on a background board.

- Face detected inside a face.

Metadata Exploration

FFMPEG and FFPROBE

HOME = "./"

FFMPEG = "/usr/local/Cellar/ffmpeg/4.1.3_1/bin"

FFMPEG_PATH = FFMPEG

DATA_FOLDER = "/Volumes/DFChallenge/deepfake-detection-challenge"

TMP_FOLDER = DATA_FOLDER

DATA_FOLDER_TRAIN = DATA_FOLDER

VIDEOS_FOLDER_TRAIN = DATA_FOLDER_TRAIN + "/train_sample_videos"

IMAGES_FOLDER_TRAIN = TMP_FOLDER + "/images"

AUDIOS_FOLDER_TRAIN = TMP_FOLDER + "/audios"

EXTRACT_META = True # False

EXTRACT_CONTENT = True # False

EXTRACT_FACES = True # False

FRAME_RATE = 0.2 # Frame per second to extract (max is 30.0)

What is ffprobe indeed? Basically, ffprobe gathers information from multimedia streams and prints it in human - and machine - readable fashion.

def run_command(*popenargs, **kwargs):

closeNULL = 0

try:

from subprocess import DEVNULL

closeNULL = 0

except ImportError:

import os

DEVNULL = open(os.devnull, 'wb')

closeNULL = 1

process = sp.Popen(stdout=sp.PIPE, stderr=DEVNULL, *popenargs, **kwargs)

output, unused_err = process.communicate()

retcode = process.poll()

if closeNULL:

DEVNULL.close()

if retcode:

cmd = kwargs.get("args")

if cmd is None:

cmd = popenargs[0]

error = sp.CalledProcessError(retcode, cmd)

error.output = output

raise error

return output

def ffprobe(filename, options = ["-show_error", "-show_format", "-show_streams", "-show_programs", "-show_chapters", "-show_private_data"]):

ret = {}

command = [FFMPEG_PATH + "/ffprobe", "-v", "error", *options, "-print_format", "json", filename]

ret = run_command(command)

if ret:

ret = json.loads(ret)

return ret

# ffmpeg -i input.mov -r 0.25 output_%04d.png

def ffextract_frames(filename, output_folder, rate = 0.25):

command = [FFMPEG_PATH + "/ffmpeg", "-i", filename, "-r", str(rate), "-y", output_folder + "/output_%04d.png"]

ret = run_command(command)

return ret

# ffmpeg -i input-video.mp4 output-audio.mp3

def ffextract_audio(filename, output_path):

command = [FFMPEG_PATH + "/ffmpeg", "-i", filename, "-vn", "-ac", "1", "-acodec", "copy", "-y", output_path]

ret = run_command(command)

return ret

%%time

js = ffprobe(VIDEOS_FOLDER_TRAIN + "/"+ "bqdjzqhcft.mp4")

print(json.dumps(js, indent=4, sort_keys=True))

# Extract some meta-data

if EXTRACT_META == True:

results = []

subfolder = VIDEOS_FOLDER_TRAIN

filepaths = glob.glob(subfolder + "/*.mp4")

for filepath in tqdm(filepaths):

js = ffprobe(filepath)

if js:

results.append(

(js.get("format", {}).get("filename")[len(subfolder) + 1:],

js.get("format", {}).get("format_long_name"),

# Video

js.get("streams", [{}, {}])[0].get("codec_name"),

js.get("streams", [{}, {}])[0].get("height"),

js.get("streams", [{}, {}])[0].get("width"),

js.get("streams", [{}, {}])[0].get("nb_frames"),

js.get("streams", [{}, {}])[0].get("bit_rate"),

js.get("streams", [{}, {}])[0].get("duration"),

js.get("streams", [{}, {}])[0].get("start_time"),

js.get("streams", [{}, {}])[0].get("avg_frame_rate"),

# Audio

js.get("streams", [{}, {}])[1].get("codec_name"),

js.get("streams", [{}, {}])[1].get("channels"),

js.get("streams", [{}, {}])[1].get("sample_rate"),

js.get("streams", [{}, {}])[1].get("nb_frames"),

js.get("streams", [{}, {}])[1].get("bit_rate"),

js.get("streams", [{}, {}])[1].get("duration"),

js.get("streams", [{}, {}])[1].get("start_time")),

)

meta_pd = pd.DataFrame(results, columns=["filename", "format", "video_codec_name", "video_height", "video_width",

"video_nb_frames", "video_bit_rate", "video_duration", "video_start_time","video_fps",

"audio_codec_name", "audio_channels", "audio_sample_rate", "audio_nb_frames",

"audio_bit_rate", "audio_duration", "audio_start_time"])

meta_pd["video_fps"] = meta_pd["video_fps"].apply(lambda x: float(x.split("/")[0])/float(x.split("/")[1]) if len(x.split("/")) == 2 else None)

meta_pd["video_duration"] = meta_pd["video_duration"].astype(np.float32)

meta_pd["video_bit_rate"] = meta_pd["video_bit_rate"].astype(np.float32)

meta_pd["video_start_time"] = meta_pd["video_start_time"].astype(np.float32)

meta_pd["video_nb_frames"] = meta_pd["video_nb_frames"].astype(np.float32)

meta_pd["video_bit_rate"] = meta_pd["video_bit_rate"].astype(np.float32)

meta_pd["audio_sample_rate"] = meta_pd["audio_sample_rate"].astype(np.float32)

meta_pd["audio_nb_frames"] = meta_pd["audio_nb_frames"].astype(np.float32)

meta_pd["audio_bit_rate"] = meta_pd["audio_bit_rate"].astype(np.float32)

meta_pd["audio_duration"] = meta_pd["audio_duration"].astype(np.float32)

meta_pd["audio_start_time"] = meta_pd["audio_start_time"].astype(np.float32)

meta_pd.to_pickle(HOME + "videos_meta.pkl")

else:

meta_pd = pd.read_pickle(HOME + "videos_meta.pkl")

meta_pd.head()

fig, ax = plt.subplots(1,6, figsize=(22, 3))

d = sns.distplot(meta_pd["video_fps"], ax=ax[0])

d = sns.distplot(meta_pd["video_duration"], ax=ax[1])

d = sns.distplot(meta_pd["video_width"], ax=ax[2])

d = sns.distplot(meta_pd["video_height"], ax=ax[3])

d = sns.distplot(meta_pd["video_nb_frames"], ax=ax[4])

d = sns.distplot(meta_pd["video_bit_rate"], ax=ax[5])

A few info on bitrate

train_pd = pd.read_json(VIDEOS_FOLDER_TRAIN + "/metadata.json").T.reset_index().rename(columns={"index": "filename"})

train_pd.head()

train_pd = pd.merge(train_pd, meta_pd[["filename", "video_height", "video_width", "video_nb_frames", "video_bit_rate", "audio_nb_frames"]], on="filename", how="left")

train_pd["count"] = train_pd.groupby(["original"])["original"].transform('count')

# train_pd.to_pickle(HOME + "train_meta.pkl")

train_pd.head()

Videos Exploration

Frames Extraction

videos_folder = VIDEOS_FOLDER_TRAIN

images_folder_path = IMAGES_FOLDER_TRAIN

audios_folder_path = AUDIOS_FOLDER_TRAIN

"""# We're only interested in Frames --> uncomment ffextract_audio if you need it

AUDIO_FORMAT = "wav" # "aac"

videos_folder = VIDEOS_FOLDER_TRAIN

images_folder_path = IMAGES_FOLDER_TRAIN

audios_folder_path = AUDIOS_FOLDER_TRAIN

if EXTRACT_CONTENT == True:

# 1h20min for chunk#0 (11GB)

# Extract some images + audio track

for idx, row in tqdm(train_pd.iterrows(), total=meta_pd.shape[0]):

try:

video_path = videos_folder + "/" + row["filename"]

images_path = images_folder_path + "/" + row["filename"][:-4]

audio_path = audios_folder_path + "/" + row["filename"][:-4]

# Extract images

if not os.path.exists(images_path): os.makedirs(images_path)

ret = ffextract_frames(video_path, images_path, rate = FRAME_RATE)

# Extract audio

if not os.path.exists(audio_path): os.makedirs(audio_path)

# ret = ffextract_audio(video_path, audio_path + "/audio." + AUDIO_FORMAT)

except:

print("Cannot extract frames/audio for:" + row["filename"])

""";

train_pd.tail()

# Preview Fake/Real (this one is obvious)

idx = 21 # 27 # 21 # 19 # 12 # 6

fake = train_pd["filename"][idx]

real = train_pd["original"][idx]

vid_width = train_pd["video_width"][idx]

vid_real = open(VIDEOS_FOLDER_TRAIN + "/" + real, 'rb').read()

data_url_real = "data:video/mp4;base64," + b64encode(vid_real).decode()

vid_fake = open(VIDEOS_FOLDER_TRAIN + "/" + fake, 'rb').read()

data_url_fake = "data:video/mp4;base64," + b64encode(vid_fake).decode()

HTML("""

<div style='width: 100%%; display: table;'>

<div style='display: table-row'>

<div style='width: %dpx; display: table-cell;'><b>Real</b>: %s<br/><video width=%d controls><source src="%s" type="video/mp4"></video></div>

<div style='display: table-cell;'><b>Fake</b>: %s<br/><video width=%d controls><source src="%s" type="video/mp4"></video></div>

</div>

</div>

""" % ( int(vid_width/3.2) + 10,

real, int(vid_width/3.2), data_url_real,

fake, int(vid_width/3.2), data_url_fake))

Face Detection

# OpenCV face detector

face_cascade = cv2.CascadeClassifier(cv2.data.haarcascades + "haarcascade_frontalface_default.xml")

def detect_face_cv2(img):

# Move to grayscale

gray_img = cv2.cvtColor(img.copy(), cv2.COLOR_RGB2GRAY)

face_locations = []

face_rects = face_cascade.detectMultiScale(gray_img, scaleFactor=1.3, minNeighbors=5)

for (x,y,w,h) in face_rects:

face_location = (x,y,w,h)

face_locations.append((face_location, 1.0))

return face_locations

# return ((x,y,w,h, confidence))

def extract_faces(files, source, detector=detect_face_cv2):

results = []

# for idx, file in tqdm(enumerate(files), total=len(files)):

for idx, file in enumerate(files):

try:

img = cv2.cvtColor(cv2.imread(file, cv2.IMREAD_UNCHANGED), cv2.COLOR_BGR2RGB)

face_locations = detector(img)

results.append((source, file[file.find("output_"):], face_locations, len(face_locations)))

except:

print("Cannot extract faces for image: %s" % file)

return results

file = fake

dump_folder = images_folder_path + "/" + file[:-4]

files = glob.glob(dump_folder + "/*")

DETECTORS = {

"cv2": detect_face_cv2,

#"mtcnn": detect_face_mtcnn

}

faces_pd = None

for key, value in DETECTORS.items():

tmp_pd = pd.DataFrame(extract_faces(files, file, detector=value), columns=["filename", "image", "boxes_" + key , "faces_" + key])

if faces_pd is None:

faces_pd = tmp_pd

else:

faces_pd = pd.merge(faces_pd, tmp_pd, on=["filename", "image"], how="left")

faces_pd.head(12)

# Plot faces extracted images

def plot_faces_boxes(df, max_cols = 2, max_rows = 6, fsize=(24, 5), max_items=12):

idx = 0

for item_idx, item in df.iterrows():

img = cv2.cvtColor(cv2.imread(IMAGES_FOLDER_TRAIN + "/" + item["filename"][:-4] +"/" + item["image"], cv2.IMREAD_UNCHANGED), cv2.COLOR_BGR2RGB)

face_img = img #.copy()

# grid subplots

row = idx // max_cols

col = idx % max_cols

if col == 0: fig = plt.figure(figsize=fsize)

ax = fig.add_subplot(1, max_cols, col + 1)

ax.axis("off")

# display image with boxes

cols = [c for c in df.columns if "boxes" in c]

for i, c in enumerate(cols, 0):

face_locations = item[c]

face_confidence = item[c]

if len(face_locations) > 0:

for face_location in face_locations:

((x,y,w,h), confidence) = face_location

# face_img = face_img[y:y+h, x:x+w]

cv2.rectangle(face_img, (x, y), (x+w, y+h), (255,i*255,0), 8)

cv2.putText(face_img, '%.1f' % (confidence*100.0), (x+w, y+h), cv2.FONT_HERSHEY_SIMPLEX, 2.0, (255,i*255,0), 9, cv2.LINE_AA)

ax.imshow(face_img)

else:

ax.imshow(img)

ax.set_title("%s %s / %s - Faces: %d %s %s" % (item["label"] if "label" in df.columns else "",

item["filename"], item["image"],

item["faces_cv2"] if "faces_cv2" in df.columns else len(face_locations),

item["faces_cv2_median"] if "faces_cv2_median" in df.columns else "",

item["faces"] if "faces" in df.columns else ""))

if (col == max_cols -1): plt.show()

idx = idx + 1

if (max_items > 0 and idx >=max_items): break

# Compare face boxes detected by OpenCV and MTCNN

plot_faces_boxes(faces_pd)

Facial Landmarks

What are facial landmarks?

Detecting facial landmarks is a subset of the shape prediction problem. Given an input image (and normally an ROI that specifies the object of interest), a shape predictor attempts to localize key points of interest along the shape.

In the context of facial landmarks, our goal is detect important facial structures on the face using shape prediction methods.

Detecting facial landmarks is therefore a two step process:

- Step 1: Localize the face in the image (WE DID IT!)

- Step 2: Detect the key facial structures on the face ROI.

import dlib ## toDo

import sys

sys.executable

sys.path

References

- Deepfake, Wikipedia

- Google DeepFake Database, Endgadget

- A quick look at the first frame of each video, from Kaggle

- Basic EDA Face Detection, split video, ROI, from Kaggle

- Face Detection with OpenCV, from Kaggle

- Play video and processing, from Kaggle